HBase is a column-oriented database management system that runs on top of HDFS. It is well suited for sparse data sets, which are common in many big data use cases. HBase provides fast, random, real-time read/write access to large data sets. In the HBase data model, columns are grouped into column families, which are stored together on disk. As a general rule, use no more than three column families per table, and group similar data together in one column family. For example, data collected from Amazon could go into one column family, while data collected from Target goes into a second column family.

Configure HBase

Install HBase

HBase requires ZooKeeper and HDFS. The HBase RegionServer can coexist with YARN NodeManagers or MapReduce TaskTrackers. However, ZooKeeper and HBase RegionServers should be located on different servers. Cloudera Manager distributes HBase in CDH and offers the following services:

- Master – an HBase Master node manages the cluster. Try to run an HBase Master on one of the master nodes. A second Master service will act as a backup master – to set up an active/backup pair of HBase Masters, simply select another Master. No other configuration is necessary because ZooKeeper will keep a record of the Master and handle the election process between the Masters. Try not to install an HBase Master service on the same node as a YARN ResourceManager.

- Gateway – add to the Application, Flume, and the HBase Master nodes. In CDH, the HBase Gateway collects HBase configurations and is used to collocate the configs for use by other services.

- RegionServer – (should be located on a different server from ZooKeeper) – in CM, by default, a RegionServer is installed onto ALL nodes; however, this is not the best configuration. You should not install a RegionServer on a host that also has a ZooKeeper service or an HBase Master service installed. RegionServers store portions of the tables and perform the work on the data.

- HBase REST Server (not required). The HBase REST Server uses HTTP verbs to perform an action, giving developers a wide choice of languages and programs to use. Only one HBase REST server is required. On large clusters, run the HBase REST server on a separate server from the HBase Master or HBase RegionServers.

- HBase Thrift Server (if you are using Hue, this is required – place onto the same server that runs the Hue Server). The HBase Thrift Server is a lightweight HBase interface. Only one HBase Thrift server is required. On large clusters, run the HBase Thrift server on a separate server from the HBase Master or HBase RegionServers.

HBase Configuration

Some example host size configurations.

Reference: https://bighadoop.wordpress.com/tag/hbase/, and http://hbase.apache.org/book.html

For memory configuration: http://hadoop-hbase.blogspot.com/2013/01/hbase-region-server-memory-sizing.html

Configure HBase High Availability

Configuring high availability in HBase is as easy as adding another HBase Master to the cluster. Add a second HBase Master service on any other node, and ZooKeeper and HBase will work together to set one Master as active and keep the other as a backup.

On a cluster with more than one node, it is usually best to add the HBase Master to one of the master nodes.

Reference: Guide to Using Apache HBase Ports

Scaling and HA: How Scaling Really Works in Apache HBase

Configure Replication

Configure HBase Replication

Reference: HBase Replication

Configure HBase Snapshots

About HBase Snapshots

In previous HBase releases, the only way to back up or clone a table was to use Copy Table or Export Table, or to copy all the hfiles in HDFS after disabling the table. The disadvantages of these methods are:

- Copy Table and Export Table can degrade region server performance.

- Disabling the table means no reads or writes; this is usually unacceptable.

HBase Snapshots allow you to clone a table without making data copies, and with minimal impact on Region Servers. Exporting the table to another cluster should not have any impact on the region servers.

Reference: Configuring HBase Snapshots

Administer HBase

HBase Commands

HBase shell

Enter the HBase shell for cli commands:

hbase shell

List Tables

List all tables, or a single table:

hbase shell

list

list 'test_table_1'

quit()

Get Row

Get a row from an HBase table, display 2 columns and convert the columns from bytes to Double and Integers:

get 'some-table','589:5431:5701:2015-12-27:19', {COLUMN => ['cf:T:toDouble','cf:Q:toInt'] }

To get a specific row by its rowkey:

get 'some-table','rowkey'

Create a Table

Create a table in HBase with three column families (A, W, S):

create 'test_table_1','A','W','S'

put 'test_table_1', 'row1', 'A', 'value1'

put 'test_table_1', 'row2', 'A', 'value2'

put 'test_table_1', 'row3', 'A', 'value3'

put 'test_table_1', 'row1', 'W', 'value1'

put 'test_table_1', 'row2', 'W', 'value2'

scan 'test_table_1'

describe 'test_table_1'

Table creation script that is more aligned with how I run in production, including a split distribution between 0 and 1,000. Note the compression and block encoding:

create 'test-table', {NAME => 'cf', DATA_BLOCK_ENCODING => 'FAST_DIFF', COMPRESSION => 'LZO'}, {SPLITS => ['0011', '0088', '0131', '0178', '0225', '0277', '0326', '0377', '0421', '0467', '0512', '0550', '0589', '0628', '0667', '0705', '0745', '0783', '0822', '0866', '0909', '0950', '0991']}
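If you do not yet know how your rowkeys are distributed, evenly spaced split points are a reasonable place to start. The Python sketch below generates zero-padded split keys and formats them as a SPLITS clause you can paste into a create statement. The helper names (even_split_keys, splits_clause) are made up for this example and are not part of HBase. The production list above is not evenly spaced, so treat this as a starting point only.

```python
def even_split_keys(low, high, regions, width=4):
    """Return regions-1 evenly spaced, zero-padded split keys between low and high."""
    if regions < 2:
        return []
    step = (high - low) / regions
    return [str(int(low + step * i)).zfill(width) for i in range(1, regions)]


def splits_clause(keys):
    """Format split keys as an HBase shell SPLITS option."""
    quoted = ", ".join("'{}'".format(key) for key in keys)
    return "{SPLITS => [" + quoted + "]}"


if __name__ == "__main__":
    # Four regions over the 0 to 1,000 key range need three split points.
    print(splits_clause(even_split_keys(0, 1000, 4)))
```

Zero-padding matters because HBase sorts rowkeys as bytes, so '0088' sorts before '0131' while '88' would sort after '131'.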

Delete Rows

Deleting a Specific Cell for one Row

Using the delete command, you can delete a specific cell in a table. The syntax of delete command is as follows:

delete '<table name>', '<rowkey>', '<column name>', <time stamp>

Example

Here is an example to delete a specific cell:

delete 'some-table-1', '1', 'personal data:city',1417521848375

Delete All Cells for one Row

Using the deleteall command, you can delete all the cells in a given row; pass a table name and rowkey, and optionally a column and timestamp. The syntax of the deleteall command is as follows:

deleteall '<table name>', '<rowkey>'

Example

Here is an example of the deleteall command, where we delete all the cells of row 1 of the employee table.

deleteall 'employee','1'

Drop a Table

To delete a table in HBase, you must first disable the table, then drop.

disable 'some-table-1'

drop 'some-table-1'

For more than one table:

Delete more than one table in HBase using disable_all and drop_all. These commands disable and drop the tables matching the regex given in the command. The syntax is as follows:

disable_all 'TEST.*'

drop_all 'TEST.*'

# or for ALL TABLES:

# warning: do not run this command in PROD

# last warning: this is dangerous:

disable_all '.*'

drop_all '.*'

Note: BE CAREFUL, there is no going back from this command. Do NOT drop all tables in PROD.

Count

Count the number of rows. On a SMALL table this is efficient (the default interval is 1,000 rows):

count 'some_table_1'

To change the interval (the number of rows counted before the running count is displayed on the screen) from the default of 1,000 rows:

count 'some_table_1', INTERVAL => 100000

Count the number of rows, for a VERY LARGE table, use MapReduce:

/opt/cloudera/parcels/CDH/bin/hbase org.apache.hadoop.hbase.mapreduce.RowCounter -Dhbase.client.scanner.caching=10000 'test_table_1'

Limit

Limit the query results with LIMIT, for example:

scan 'some_table_1', {COLUMN => ['cf:T:toDouble','cf:Q:toInt'], STARTROW => '588', ENDROW => '589', 'LIMIT' => 5}

Filter

Filter results from a scan or get for debug purposes:

Add functions to use in your search:

hbase shell

import org.apache.hadoop.hbase.filter.CompareFilter

import org.apache.hadoop.hbase.filter.SingleColumnValueFilter

import org.apache.hadoop.hbase.filter.SubstringComparator

import org.apache.hadoop.hbase.util.Bytes

import org.apache.hadoop.hbase.filter.QualifierFilter

import org.apache.hadoop.hbase.filter.BinaryComparator

scan 'users', { FILTER => SingleColumnValueFilter.new(Bytes.toBytes('cf'), Bytes.toBytes('name'), CompareFilter::CompareOp.valueOf('EQUAL'), BinaryComparator.new(Bytes.toBytes('abc')))}

Filter for an integer that is less than a comparison number:

scan 'some_table_1', {COLUMN => ['cf:T:toDouble','cf:Q:toInt'], FILTER => SingleColumnValueFilter.new(Bytes.toBytes('cf'), Bytes.toBytes('T'), CompareFilter::CompareOp.valueOf('LESS'), BinaryComparator.new(Bytes.toBytes('0'))), STARTROW => '588', ENDROW => '589', 'LIMIT' => 5000}

Filter for a string using SubstringComparator to search for “2017-08-16” within the rowkey:

hbase shell

import org.apache.hadoop.hbase.filter.CompareFilter

import org.apache.hadoop.hbase.filter.SubstringComparator

scan 'some_table_2', {LIMIT => 500, TIMERANGE => [1502866800000,1503385200000], FILTER => org.apache.hadoop.hbase.filter.RowFilter.new(CompareFilter::CompareOp.valueOf('EQUAL'), SubstringComparator.new("2017-08-16"))}

Prefix Filter

Using a PrefixFilter can be slow because it performs a table scan until it reaches the prefix. A more performant solution is to use a STARTROW and a FILTER like so:

scan 'some_table_1', { LIMIT => 100, STARTROW => '40:5477:64220555046', FILTER => "PrefixFilter('40:5477:64220555046')" }

scan 'some_table_1', { LIMIT => 10, STARTROW => '1:121:5210000780:9298101:20160105', FILTER => "PrefixFilter('1:121:5210000780:9298101:')" }
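A related technique, different from the STARTROW plus PrefixFilter combination above, is to bound the scan on both sides: start at the prefix and stop at the first key that can no longer match it. The Python sketch below computes that stop key by incrementing the last byte of the prefix. The function name prefix_stop_row is hypothetical, and the result would be passed as the end row of the scan (ENDROW in the examples in this document). Treat it as an illustration under those assumptions rather than a drop-in tool.

```python
def prefix_stop_row(prefix):
    """Return the smallest key that sorts after every key starting with prefix.

    prefix is a bytes object. Returns None when no finite stop key exists
    (an empty prefix or one made only of 0xFF bytes), in which case the scan
    has to run to the end of the table.
    """
    data = bytearray(prefix)
    # Trailing 0xFF bytes cannot be incremented, so drop them first.
    while data and data[-1] == 0xFF:
        data.pop()
    if not data:
        return None
    data[-1] += 1
    return bytes(data)


if __name__ == "__main__":
    # ':' (0x3A) becomes ';' (0x3B), so the scan stops right after the prefix range.
    print(prefix_stop_row(b"1:121:5210000780:9298101:"))
```

With both bounds set, the region servers only read the key range that can match, so no filter has to discard rows past the prefix.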

TIMERANGE Filter

For a very fast scan with a filter, use the TIMERANGE filter, which will grab data from a time range:

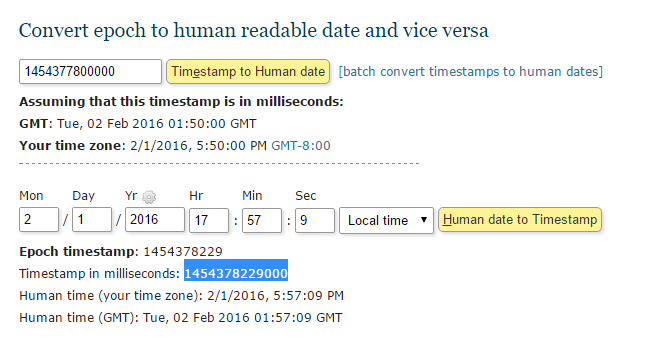

scan 'some_table_1', { LIMIT => 100, TIMERANGE => [1454377800000,1454379000000] }

Get the timestamp using the epochconverter, change to Local Time, and enter a date/time:

http://www.epochconverter.com/
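If you would rather not use a web page, the same conversion is a few lines of Python. This is a minimal sketch: the helper names are made up for this example, and it assumes the times you enter are UTC unless you pass another timezone. TIMERANGE expects epoch milliseconds, so the seconds value is multiplied by 1,000.

```python
from datetime import datetime, timezone


def to_epoch_millis(year, month, day, hour=0, minute=0, second=0, tz=timezone.utc):
    """Convert a wall-clock time in the given timezone to epoch milliseconds."""
    moment = datetime(year, month, day, hour, minute, second, tzinfo=tz)
    return int(moment.timestamp() * 1000)


def timerange_clause(start_ms, end_ms):
    """Format a TIMERANGE option for an HBase shell scan."""
    return "TIMERANGE => [{},{}]".format(start_ms, end_ms)


if __name__ == "__main__":
    start = to_epoch_millis(2016, 2, 2, 1, 50)
    end = to_epoch_millis(2016, 2, 2, 2, 10)
    print(timerange_clause(start, end))
```

The two values in the example reproduce the 20 minute window used in the scan above.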

Rename Table

To rename an HBase table, take a copy of it and delete the old table:

disable 'tableName'

snapshot 'tableName', 'tableSnapshot'

clone_snapshot 'tableSnapshot', 'newTableName'

delete_snapshot 'tableSnapshot'

drop 'tableName'

Copy Table

Copy the table to the same cluster:

snapshot 'TableName', 'TableName-snapshot'

clone_snapshot 'TableName-snapshot', 'NewTableName'

See the snapshots:

list_snapshots

Move

Move a region. Optionally specify a target RegionServer; otherwise HBase chooses one at random.

NOTE: You pass the encoded region name, not the region name so this command is a little different to the others. The encoded region name is the hash suffix on region names: e.g. if the region name were TestTable,711:2969:14272:2015-12-27:17,1451413644394.97952facce48f92bb55d1171f41a3152., then the encoded region name portion is 97952facce48f92bb55d1171f41a3152

For example:

move '97952facce48f92bb55d1171f41a3152'

A server name is its host, port plus startcode.

For example (from the http://servername01:60010/master-status):

servername.example.corp,60020,1450160080693

Examples:

hbase> move 'ENCODED_REGIONNAME'

hbase> move 'ENCODED_REGIONNAME', 'SERVER_NAME'
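Pulling the encoded region name out of a full region name by hand is error prone, so here is a small Python sketch that does it. The function names are made up for this example. It assumes the region name has the form shown above, with the hash after the last dot and an optional trailing dot.

```python
def encoded_region_name(region_name):
    """Return the hash suffix (encoded region name) of a full region name."""
    trimmed = region_name.rstrip(".")
    return trimmed.rsplit(".", 1)[-1]


def move_command(region_name, server_name=None):
    """Build an HBase shell move command; the target server is optional."""
    command = "move '{}'".format(encoded_region_name(region_name))
    if server_name:
        command += ", '{}'".format(server_name)
    return command


if __name__ == "__main__":
    full_name = ("TestTable,711:2969:14272:2015-12-27:17,"
                 "1451413644394.97952facce48f92bb55d1171f41a3152.")
    print(move_command(full_name, "servername.example.corp,60020,1450160080693"))
```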

Split

Split an entire table, or pass a region to split an individual region. With the second parameter, you can specify an explicit split key for the region.

Examples:

split 'tableName'

split 'regionName' # format: 'tableName,startKey,id'

split 'tableName', 'splitKey'

split 'regionName', 'splitKey'

Output Results to File

To output the HBase scan results to a file, you can pipe a command into the HBase shell and redirect the results to a file:

echo "scan 'foo'" | hbase shell > ~/myText.txt

Another option is an EOF here doc, which is potentially more customizable:

hbase shell <<EOF > ~/myText.txt

scan 'foo'

EOF

Merge Regions

To complete an online merge of two regions of a table, use the HBase shell to issue the online merge command. By default, both regions to be merged should be neighbors; that is, one end key of a region should be the start key of the other region. Although you can “force merge” any two regions of the same table, this can create overlaps and is not recommended.

The Master and RegionServer both participate in online merges. When the request to merge is sent to the Master, the Master moves the regions to be merged to the same RegionServer, usually the one where the region with the higher load resides. The Master then requests the RegionServer to merge the two regions. The RegionServer processes this request locally. Once the two regions are merged, the new region will be online and available for server requests, and the old regions are taken offline.

For merging two consecutive regions use the following command:

hbase> merge_region 'ENCODED_REGIONNAME', 'ENCODED_REGIONNAME'

For merging regions that are not adjacent, passing true as the third parameter forces the merge.

hbase> merge_region 'ENCODED_REGIONNAME', 'ENCODED_REGIONNAME', true

Note: This command is slightly different from other region operations. You must pass the encoded region name (ENCODED_REGIONNAME), not the full region name. The encoded region name is the hash suffix on region names. For example, if the region name is TestTable,0094429456,1289497600452.527db22f95c8a9e0116f0cc13c680396, the encoded region name portion is 527db22f95c8a9e0116f0cc13c680396.

Script the Merge Commands

To merge regions that are close by, copy all the encoded region names into a file (inventory_regions in this example), one per line.

Divide alternate regions into 2 files

awk 'NR % 2 == 0' inventory_regions > file1

awk 'NR % 2 != 0' inventory_regions > file2

Loop over the files and merge the corresponding regions

#!/bin/sh

exec 3<"file1"

exec 4<"file2"

# stop as soon as either file runs out of lines

while IFS= read -r line1 <&3 && IFS= read -r line2 <&4

do

   echo "merge_region '$line1','$line2'" | hbase shell

done
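The same pairing can be sketched in Python, which makes it easier to review the commands before anything is sent to the HBase shell. This is only an illustration: the function name merge_commands is made up, and it assumes the list holds encoded region names in start key order, so that each pair is adjacent. A leftover region at the end of an odd-length list is skipped.

```python
def merge_commands(encoded_regions):
    """Pair consecutive regions (1st+2nd, 3rd+4th, ...) into merge_region commands."""
    commands = []
    for i in range(0, len(encoded_regions) - 1, 2):
        first, second = encoded_regions[i], encoded_regions[i + 1]
        commands.append("merge_region '{}','{}'".format(first, second))
    return commands


if __name__ == "__main__":
    # Placeholder names; in practice read them from the inventory_regions file.
    regions = ["region_a", "region_b", "region_c", "region_d", "region_e"]
    for command in merge_commands(regions):
        print(command)
```

Printing the commands first and piping them into one hbase shell session afterwards also avoids starting a new shell for every merge.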

Add a TTL to an Existing Table

To apply a TTL to an existing table, you need to run an alter table command, and then run a major compact to set the change:

# set the TTL for 2 years (I added 2 days for a buffer = 366 days per year):

alter 'some_table1', NAME => 'cf', TTL => 63244800

# validate the change:

describe 'some_table1'

# run a major compact to set the change:

major_compact 'some_table1'
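The TTL is given in seconds, so the 2 year value is 2 x 366 days x 86,400 seconds = 63,244,800. A small Python sketch of that arithmetic, with hypothetical helper names, in case you need a different retention period:

```python
SECONDS_PER_DAY = 24 * 60 * 60


def ttl_seconds(years, days_per_year=366):
    """Return a TTL in seconds; 366 days per year leaves a small buffer."""
    return years * days_per_year * SECONDS_PER_DAY


def alter_ttl_command(table, family, ttl):
    """Build the HBase shell alter command that sets the TTL on one column family."""
    return "alter '{}', NAME => '{}', TTL => {}".format(table, family, ttl)


if __name__ == "__main__":
    print(alter_ttl_command("some_table1", "cf", ttl_seconds(2)))
```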

Repair HBase Table

Fix any inconsistencies with HBase

sudo -u hbase hbase hbck

sudo -u hbase hbase hbck -fix

Example results:

Number of Tables: 5

Number of live region servers: 1

Number of dead region servers: 0

Master: servername01,60000,1402597488665

Number of backup masters: 0

Number of empty REGIONINFO_QUALIFIER rows in .META.: 0

Summary:

-ROOT- is okay.

Number of regions: 1

Deployed on: servername01,60020,1402597096844

.META. is okay.

Number of regions: 1

Deployed on: servername01,60020,1402597096844

TestTable is okay.

Number of regions: 1

Deployed on: servername01,60020,1402597096844

Note: See more examples in the Troubleshooting section below.

Balancer

Balance regions across RegionServers. The process normally runs automatically every hour or so. The command returns true or false, and it will fail if regions are in transition or if there are any inconsistencies.

hbase shell

balancer

Note: if the balancer has been disabled due to previous region errors, you may have to re-enable the balancer.

Enable or disable the balancer with balance_switch, which returns the previous balancer state. Examples:

balance_switch true

balance_switch false

Bulk Import and Row Count Using MapReduce

To bulk import:

hbase org.apache.hadoop.hbase.mapreduce.ImportTsv -Dimporttsv.columns=a,b,c <tablename> <hdfs-inputdir>

Example:

hbase org.apache.hadoop.hbase.mapreduce.ImportTsv -Dimporttsv.columns="HBASE_ROW_KEY,a:c1" -Dimporttsv.separator="," perf5 /tmp/10000000.txt;date

Rowcount:

hbase org.apache.hadoop.hbase.mapreduce.RowCounter <tablename>

Move Files to HBase Using Pig

From the command line:

pig -param YourParameterName1=YourValue1 YourPigScript.pig

To run against HBase, you usually pass in the ZooKeeper server as a parameter.

pig -param Zookeeper=zk.servername01 YourPigScript.pig

Then in your pig script, you would reference the variable like so:

set hbase.zookeeper.quorum '$Zookeeper'

and use the $Zookeeper variable on any Load or Store commands as needed.

Load CSV file into HBase Table

1. The sample data.csv is shown below; each field in a row is separated by a comma.

row1,c1,c2

row2,c1,c2

row3,c1,c2

row4,c1,c2

row5,c1,c2

row6,c1,c2

row7,c1,c2

row8,c1,c2

row9,c1,c2

row10,c1,c2

row11,c1,c2

row12,c1,c2

2. Copy the data.csv file to the Linux VM (using Hue is an easy way to do this). I copied this to /tmp/data.csv

3. Connect to the Linux VM using PuTTY

4. Create the table from HBase shell.

$ hbase shell

Then run the following from the HBase shell to create the table 't1' with two split points (three regions).

hbase(main):008:0> create 't1', {NAME => 'cf1'}, {SPLITS => ['row5', 'row9']}

CTRL + C

5. Upload the data from the delimited file in HDFS into HBase via Puts

hbase org.apache.hadoop.hbase.mapreduce.ImportTsv -Dimporttsv.columns="HBASE_ROW_KEY,cf1:c1,cf1:c2" -Dimporttsv.separator="," t1 /tmp/data.csv

6. To verify that the data is uploaded, open the HBase shell again and run the following.

$ hbase shell

scan 't1'
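The -Dimporttsv.columns value has to list one entry per field in the file, in order, with HBASE_ROW_KEY marking the field that becomes the rowkey. The Python sketch below assembles the ImportTsv arguments from a column family and a list of qualifiers. The function name importtsv_args is hypothetical, and it assumes the rowkey is the first field, as in the data.csv sample.

```python
def importtsv_args(table, input_path, family, qualifiers, separator=","):
    """Build the argument list for an ImportTsv run, rowkey in the first field."""
    columns = ["HBASE_ROW_KEY"] + ["{}:{}".format(family, q) for q in qualifiers]
    return [
        "hbase",
        "org.apache.hadoop.hbase.mapreduce.ImportTsv",
        "-Dimporttsv.columns=" + ",".join(columns),
        "-Dimporttsv.separator=" + separator,
        table,
        input_path,
    ]


if __name__ == "__main__":
    # Prints the command for review; nothing is executed here.
    print(" ".join(importtsv_args("t1", "/tmp/data.csv", "cf1", ["c1", "c2"])))
```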

Copy a Live HBase Table to Another Cluster

CopyTable Definition

CopyTable is a utility that can copy part or all of a table, either to the same cluster or to another cluster. The target table must already exist. It would not be hard to use CopyTable as a way to replicate or back up an existing HBase cluster into a disaster recovery site – use a sliding window to go after the newest data.

The usage is as follows:

$ ./bin/hbase org.apache.hadoop.hbase.mapreduce.CopyTable --help

Usage: CopyTable [general options] [--starttime=X] [--endtime=Y] [--new.name=NEW] [--peer.adr=ADR] <tablename>

Options:

rs.class hbase.regionserver.class of the peer cluster,

specify if different from current cluster

rs.impl hbase.regionserver.impl of the peer cluster,

startrow the start row

stoprow the stop row

starttime beginning of the time range (unixtime in millis)

without endtime means from starttime to forever

endtime end of the time range. Ignored if no starttime specified.

versions number of cell versions to copy

new.name new table's name

peer.adr Address of the peer cluster given in the format

hbase.zookeeer.quorum:hbase.zookeeper.client.port:zookeeper.znode.parent

families comma-separated list of families to copy

To copy from cf1 to cf2, give sourceCfName:destCfName.

To keep the same name, just give "cfName"

all.cells also copy delete markers and deleted cells

Args:

tablename Name of the table to copy

Examples:

To copy 'TestTable' to a cluster that uses replication for a 1 hour window:

$ bin/hbase org.apache.hadoop.hbase.mapreduce.CopyTable --starttime=1265875194289 --endtime=1265878794289 --peer.adr=server1,server2,server3:2181:/hbase --families=myOldCf:myNewCf,cf2,cf3 TestTable

For performance consider the following general options:

It is recommended that you set the following to >=100. A higher value uses more memory but

decreases the round trip time to the server and may increase performance.

-Dhbase.client.scanner.caching=100

The following should always be set to false, to prevent writing data twice, which may produce

inaccurate results.

-Dmapred.map.tasks.speculative.execution=false
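The sliding window mentioned in the definition comes down to computing --starttime and --endtime in epoch milliseconds. The Python sketch below builds those two options for a window that ends at a given time. The function name sliding_window_args is made up for this example, and how often you run it and how much the windows overlap are left to you.

```python
import time


def sliding_window_args(end_ms, window_minutes=60):
    """Return CopyTable --starttime/--endtime options for a window ending at end_ms."""
    start_ms = end_ms - window_minutes * 60 * 1000
    return ["--starttime={}".format(start_ms), "--endtime={}".format(end_ms)]


if __name__ == "__main__":
    # Window covering the last hour, ending now.
    now_ms = int(time.time() * 1000)
    print(" ".join(sliding_window_args(now_ms)))
```

Using the end time from the usage example above reproduces its 1 hour window exactly.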

CopyTable Example

Use this in the event that you want to copy the contents of a table in a live instance of HBase into another HBase cluster.

I’m kind of in a rush to get this stuff down, so there are probably some assumption gaps here. We should take another pass over this and revise where appropriate. Screenshots would be very nice to have. My kingdom for a tech writer.

The example that I’ll use here is that of copying the SOMETABLE1 table from servername01 into another HBase cluster (destinationservername02).

Here is the procedure to copy a table from one cluster to another:

1. Make sure that your target instance is routable from your originating instance.

2. Make sure that you have permissions to run MR jobs. You’ll need to run this job under a user account that has a directory under /user (e.g. /user/hdfs). Note that we appear to be using simple authentication for Hadoop, so for the sake of permissions, all that matters is the username. This means that you can do things like this: sudo su hdfs -c "hbase <command>"

If you already have this table created in your target instance, skip the next few steps.

3. Obtain the table schema for the table you are copying. You can do this from within the HBase shell by running the describe command: describe 'SOMETABLE1' Copy the entire contents into a text editor, and make sure that any errant line breaks are removed (this is common if you’re copying directly out of a terminal).

4. Each entry in the table schema structure (a single entry is surrounded by curly braces) is broken into its own line. You’ll want to comma-delimit these instead, so they look something like: {<first column family stuff>},{<second column family stuff>},…

Yeah, it’s pretty incomprehensible. You might actually have to edit it to remove some stuff (see the next step).

5. Using the HBase shell (or your interface of choice), create a corresponding table in your target instance: create 'FOO.SOMETABLE1',{<first column family stuff>},{<second column family stuff>},…

Note: All you’re doing here is prepending the string "create 'FOO.SOMETABLE1'," to the table description spew from the last step.

Double Note: If HBase rejects your table definition, you may have to fiddle with some of the column family flags to accommodate disparate versions of HBase, or instances with slightly different configurations. In this case, I had to remove all instances of the TTL field, because my HBase instance didn’t understand its ‘FOREVER’ value.

6. From the originating cluster, run the CopyTable command.

My example command line:

sudo su hdfs -c "hbase -Dhbase.client.scanner.caching=100 -Dmapreduce.map.speculative=false org.apache.hadoop.hbase.mapreduce.CopyTable --peer.adr=destinationservername02:2181:/hbase --new.name=FOO.SOMETABLE1 SOMETABLE1"

Note that I’m copying into a test table called FOO.SOMETABLE1. I’m doing this because I don’t want a mistake to break the originating table. I’ve specified my own HBase instance correctly, but all it takes is one fat finger to cause a table to rewrite itself in the originating cluster, which could be a pretty serious hassle. In this case, the worst that will happen (we hope) is that we will accidentally write a new table in the originating cluster, rather than my target cluster, with the FOO name.

7. BE READY TO KILL THE JOB. If you need to kill the job, make sure you don’t accidentally kill someone else’s job, or Storm, which also runs under the YARN system. Keep an eye on the running job and its logs to make sure you’re not getting a pile of exceptions. If you see a pile of exceptions and nothing appears to fix itself, kill the job.

8. Depending on the size of the table, it’ll take a while before you see any mapper progress. Do yourself a favor and hit the Map Tasks monitoring page for the job, then refresh it occasionally to watch the counters increase. Note that the CopyTable job consists only of mappers, no reducers, so once your map tasks are complete, the job should finish.
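Steps 3 through 5 (clean up the describe output, comma-delimit the entries, prepend the create) can be sketched in Python. This is a rough illustration, not a parser for every describe format: the function name create_statement is made up, each input string is assumed to be one curly-brace entry copied from the describe output, and an attribute can only be dropped when it is not the first one in its entry.

```python
import re


def create_statement(table_name, family_entries, drop_attributes=()):
    """Join column family entries into one HBase shell create statement."""
    cleaned = []
    for entry in family_entries:
        # Collapse errant line breaks and repeated spaces from terminal copy/paste.
        entry = " ".join(entry.split())
        for attribute in drop_attributes:
            # Remove ", ATTR => 'value'" pairs, e.g. a TTL the target rejects.
            pattern = r",\s*" + re.escape(attribute) + r"\s*=>\s*'[^']*'"
            entry = re.sub(pattern, "", entry)
        cleaned.append(entry)
    return "create '{}', {}".format(table_name, ", ".join(cleaned))


if __name__ == "__main__":
    entries = [
        "{NAME => 'cf1', TTL => 'FOREVER',\n VERSIONS => '1'}",
        "{NAME => 'cf2', TTL => 'FOREVER'}",
    ]
    print(create_statement("FOO.SOMETABLE1", entries, drop_attributes=("TTL",)))
```

Review the generated statement by eye before pasting it into the target cluster's shell.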

Reference: http://www.cloudera.com/content/www/en-us/documentation/enterprise/latest/topics/admin_hbase_import.html

Troubleshooting

HBase Region is Not Online

Fix Hbase Region

We were not able to contact an HBase table using Phoenix. The jetty REST API log reported that the SYSTEM.CATALOG table was not online (org.apache.hadoop.hbase.NotServingRegionException: Region is not online: SYSTEM.CATALOG). Looking at the HBase Web UI, I noticed that an HBase region was not online. Phoenix was unable to connect to HBase because the metadata stored in the SYSTEM.CATALOG table was not available.

Full error log from jetty:

Tue Aug 26 08:31:57 PDT 2014, org.apache.hadoop.hbase.ipc.ExecRPCInvoker$1@232d1800, java.net.ConnectException: Connection refused: no further information

Tue Aug 26 08:31:58 PDT 2014, org.apache.hadoop.hbase.ipc.ExecRPCInvoker$1@232d1800, org.apache.hadoop.hbase.ipc.HBaseClient$FailedServerException: This server is in the failed servers list: servername30/192.168.210.205:60020

Tue Aug 26 08:34:03 PDT 2014, org.apache.hadoop.hbase.ipc.ExecRPCInvoker$1@232d1800, org.apache.hadoop.hbase.NotServingRegionException: org.apache.hadoop.hbase.NotServingRegionException: Region is not online: SYSTEM.CATALOG,,1395080753790.e49d72ecc717d4e369307c888625d247.

I outlined the resolution steps below:

1. CAW failed on a request to the jetty REST API (running on servername04).

2. Mike discovered an error in the /var/log/jetty/ log: org.apache.hadoop.hbase.NotServingRegionException: Region is not online: SYSTEM.CATALOG

3. The API test failed (http://servername04:22100/v1/ProductCatalog/A342B/Products?clientToken=G4563K4634KS9&partnerProductId=117853,116517&fields=(requestId,Products,ProductProperties,uuid,partnerProductId,productURL,ProductGroups,ProductGroupProperties,code,name,value)&requestId=5449ff0a-098b-486f-8f25-edf00955bc8c).

4. I noticed one HBase RegionServer, servername11, was in a bad state and I restarted the RegionServer. The problem was not resolved.

5. I looked into the HBase Master Web UI and noticed that the SYSTEM.CATALOG table was not assigned to a RegionServer and there were zero Online Regions (state=FAILED_OPEN) (http://servername03:60010/master-status?filter=general).

6. I restarted the entire HBase service. The problem was not resolved.

7. From a working node I ran an HBase check and the table was repaired:

sudo -u hbase hbase hbck -fixAssignments

8. If this does not resolve the problem, you can dig into the details of the error:

sudo -u hbase hbase hbck -details

9. If you see errors like the following (first region should start with an empty key, or there is a hole in the region chain between x and x), then you need to attempt to repair the region:

ERROR: (region TABLE_NAME,07f94116-12c4-426e-a8b4-29ceeeb63c2a,1404918909107.71a4ab1524f013f6697368f0f55e6ca8.) First region should start with an empty key. You need to create a new region and regioninfo in HDFS to plug the hole.

ERROR: There is a hole in the region chain between 200de7b1-7f16-4ff6-b94f-6717ecec8ae6 and 506e3776-1bc1-49e9-876f-f1a2a28f5154. You need to create a new .regioninfo and region dir in hdfs to plug the hole.

10. hbck reference: http://hbase.apache.org/book/hbck.in.depth.html. When repairing a corrupted HBase, it is best to repair the lowest risk inconsistencies first. These are generally region consistency repairs: localized single region repairs that only modify in-memory data, ephemeral ZooKeeper data, or patch holes in the META table. Region consistency requires that the HBase instance has the state of the region’s data in HDFS (.regioninfo files), the region’s row in the hbase:meta table, and the region’s deployment/assignments on region servers and the master in accordance. Options for repairing region consistency include:

-fixAssignments (equivalent to the 0.90 -fix option) repairs unassigned, incorrectly assigned or multiply assigned regions.

-fixMeta removes meta rows when corresponding regions are not present in HDFS and adds new meta rows if the regions are present in HDFS but not in META.

To fix deployment and assignment problems you can run this command:

sudo -u hbase hbase hbck -fixAssignments

11. To fix deployment and assignment problems as well as repairing incorrect meta rows you can run this command:

sudo -u hbase hbase hbck -fixAssignments -fixMeta

12. There are a few classes of table integrity problems that are low risk repairs. The first two are degenerate (startkey == endkey) regions and backwards regions (startkey > endkey). These are automatically handled by sidelining the data to a temporary directory (/hbck/xxxx). The third low-risk class is HDFS region holes. This can be repaired by using the -fixHdfsHoles option for fabricating new empty regions on the file system. If holes are detected you can use -fixHdfsHoles and should include -fixMeta and -fixAssignments to make the new region consistent.

sudo -u hbase hbase hbck -fixAssignments -fixMeta -fixHdfsHoles

13. Since this is a common operation, we’ve added the -repairHoles flag that is equivalent to the previous command:

sudo -u hbase hbase hbck -repairHoles

14. If inconsistencies still remain after these steps, you most likely have table integrity problems related to orphaned or overlapping regions.

15. To fix reference files, run this command (to fix this type of error: ERROR: Found lingering reference file hdfs://servername02:8020/hbase/data/default/TABLE_NAME/8a3a20307c5dc5d104b439eb48289a66/recovered.edits/0000000000120139909.temp):

sudo -u hbase hbase hbck -fixReferenceFiles

# If fixReferenceFiles failed, repair:

sudo -u hbase hbase hbck -repair

# If neither of the above two works, move the *.temp files out of recovered.edits into /tmp – so just run this:

sudo -u hdfs hadoop fs -mv /hbase/data/default/TABLE_NAME/8a3a20307c5dc5d104b439eb48289a66/recovered.edits/0000000000120139909.temp /tmp

# but this effectively destroys data, so use sparingly!

Rebuild the ZooKeeper znodes

If the above instructions do not work, you might have to look at ZooKeeper. This situation can also happen when the .META. table and the HBase ZooKeeper znodes get out of sync.

If all of the following are true, you can rebuild the znodes with the steps below. You are:

– not using peer replication (to another cluster)

– not using HBase Snapshots

– able to take down the HBase service for a restart

- Stop the HBase service

- Use the ‘hbase zkcli’ command to enter the ZooKeeper command line

- From ZooKeeper’s command line, run: rmr /hbase # this deletes the HBase znodes

- quit out of ZooKeeper

- From the command line, run: sudo -u hbase hbase org.apache.hadoop.hbase.util.hbck.OfflineMetaRepair # this is an offline hbck

- Restart the HBase service

HBase will rebuild its znode tree upon restart. If that doesn’t immediately repair the problem, I’ve found that sometimes you have to re-run the ‘sudo -u hbase hbase hbck -fix’ command.

HBase: Stopped: Unhandled: org.apache.hadoop.hbase.ClockOutOfSyncException: Failed deleting my ephemeral node

If a ClockOutOfSyncException led to the "Failed deleting my ephemeral node" error, you have to fix the time! Restart ntp, or do whatever it takes to get the server clocks back in sync.

STOPPED: Unhandled: org.apache.hadoop.hbase.ClockOutOfSyncException: Server servername08,60020,1406842783275 has been rejected; Reported time is too far out of sync with master. Time difference of 3320455ms > max allowed of 30000ms

at org.apache.hadoop.hbase.master.ServerManager.checkClockSkew(ServerManager.java:316)

at org.apache.hadoop.hbase.master.ServerManager.regionServerStartup(ServerManager.java:216)

at org.apache.hadoop.hbase.master.HMaster.regionServerStartup(HMaster.java:1296)

at org.apache.hadoop.hbase.protobuf.generated.RegionServerStatusProtos$RegionServerStatusService$2.callBlockingMethod(RegionServerStatusProtos.java:5085)

at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:2012)

Failed deleting my ephemeral node

org.apache.zookeeper.KeeperException$NoNodeException: KeeperErrorCode = NoNode for /hbase/rs/0.0.0.0,60020,1406842783275

at org.apache.zookeeper.KeeperException.create(KeeperException.java:111)

at org.apache.zookeeper.KeeperException.create(KeeperException.java:51)

at org.apache.zookeeper.ZooKeeper.delete(ZooKeeper.java:873)

at org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper.delete(RecoverableZooKeeper.java:156)

at org.apache.hadoop.hbase.zookeeper.ZKUtil.deleteNode(ZKUtil.java:1273)
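
To see how far off the clock really is, the skew can be pulled out of the exception message. This is a minimal sketch that assumes the message format shown in the log above; parse_clock_skew is a hypothetical helper name, not part of HBase.

```python
import re

# Matches the tail of the ClockOutOfSyncException message quoted above.
SKEW_RE = re.compile(r"Time difference of (\d+)ms > max allowed of (\d+)ms")


def parse_clock_skew(message):
    """Return (skew_ms, max_allowed_ms) from the message, or None if absent."""
    match = SKEW_RE.search(message)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


line = ("Server servername08,60020,1406842783275 has been rejected; "
        "Reported time is too far out of sync with master. "
        "Time difference of 3320455ms > max allowed of 30000ms")
skew_ms, max_ms = parse_clock_skew(line)
print(f"skew: {skew_ms / 60000:.1f} min, allowed: {max_ms / 1000:.0f} s")
```

For the log line above this reports a skew of roughly 55 minutes against a 30 second limit, which points at a broken time service rather than ordinary drift.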

HBase Started with Bad Health: Address is already in use

Error: If you receive the following error, you will have to restart ZooKeeper:

3:48:55.096 PM ERROR org.apache.hadoop.hbase.master.HMasterCommandLine

Master exiting

java.lang.RuntimeException: Failed construction of Master: class org.apache.hadoop.hbase.master.HMaster

at org.apache.hadoop.hbase.master.HMaster.constructMaster(HMaster.java:1948)

at org.apache.hadoop.hbase.master.HMasterCommandLine.startMaster(HMasterCommandLine.java:152)

at org.apache.hadoop.hbase.master.HMasterCommandLine.run(HMasterCommandLine.java:104)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:76)

at org.apache.hadoop.hbase.master.HMaster.main(HMaster.java:1962)

Caused by: java.net.BindException: Address already in use

at sun.nio.ch.Net.bind(Native Method)

at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:126)

at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:59)

at org.apache.hadoop.hbase.ipc.HBaseServer.bind(HBaseServer.java:256)

at org.apache.hadoop.hbase.ipc.HBaseServer$Listener.<init>(HBaseServer.java:484)

at org.apache.hadoop.hbase.ipc.HBaseServer.<init>(HBaseServer.java:1561)

Solution: Restart ZooKeeper.

HBase Will Not Start: Address Already in Use

This is the same java.net.BindException: Address already in use failure shown under ‘HBase Started with Bad Health: Address is already in use’, and the solution is the same: restart ZooKeeper.

HBase Will Not Start: Port in Use

On a new single-node installation, if you receive the following error, you might have to delete the cluster and create a new one from scratch. Obviously, this only works on a new cluster. On an existing cluster, you will have to find out what is holding port 60030 (is another process already using the port?):

2014-06-09 17:01:40,647 ERROR org.apache.hadoop.hbase.master.HMaster: Region server servername26,60020,1402358498360 reported a fatal error:

ABORTING region server servername26,60020,1402358498360: Unhandled exception: Port in use: 0.0.0.0:60030

Cause:

java.net.BindException: Port in use: 0.0.0.0:60030

at org.apache.hadoop.http.HttpServer.openListener(HttpServer.java:730)

at org.apache.hadoop.http.HttpServer.start(HttpServer.java:674)

at org.apache.hadoop.hbase.regionserver.HRegionServer.putUpWebUI(HRegionServer.java:1757)
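
Before restarting anything on an existing cluster, it helps to confirm that the port really is taken. The sketch below tries to bind the port the same way a server would. port_is_free is a hypothetical helper name, and the check has to run on the affected host itself.

```python
import socket


def port_is_free(port, host="0.0.0.0"):
    """Return True if the port can be bound on this host, False if it is held."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        # SO_REUSEADDR keeps lingering TIME_WAIT connections from looking like
        # a live listener; an actual listener still makes bind() fail.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        try:
            sock.bind((host, port))
        except OSError:
            return False
    return True


# 60030 is the RegionServer web UI port from the error above.
print("port 60030 free:", port_is_free(60030))
```

If this prints False, a tool such as lsof or ss can then show which process owns the port.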

HBase: Cannot Start HBase Master when HBase RegionServer is Started: SplitLogManager: Error Splitting

I could not start HBase on servername01. The VM had gone down unexpectedly, and HBase could not restart because the transaction log it needed to replay had become unusable (corrupt). I had to move the broken file away before restarting the Master node. The same problem also occurred after an HBase upgrade that included a major Phoenix upgrade.

Logs: sudo vi /var/log/hbase/hbase-cmf-hbase1-MASTER-servername01.log.out

In the log file, look for the file that cannot be split: hdfs://servername01:8020/hbase/.logs/servername01,60020,1393982440484-splitting

Then search hdfs for the file:

sudo -u hdfs hadoop fs -ls /hbase/.logs #… / add the filename

Note that the file is 0 KB. Next, move the offending file – you may have to move the file BACK after HBase is running again:

sudo -u hdfs hadoop fs -mv /hbase/.logs/servername01,60020,1393982440484-splitting /tmp/servername01,60020,1393982440484-splitting.old

Restart the HBase Master service. The splitting log file can be replayed back to recover any lost data. To repair the damaged file:

- Move the file back

- Run the hbase hbck commands to fix meta data (FixHbaseRegion)

- Run through the hbck commands until 0 inconsistencies are found.

Error: 2014-06-12 09:42:52,782 INFO org.apache.hadoop.hbase.master.SplitLogManager: task /hbase/splitlog/hdfs%3A%2F%2Fservername01%3A8020%2Fhbase%2F.logs%2Fservername01%2C60020%2C1393982440484-splitting%2Fservername01%252C60020%252C1393982440484.1394037615142 entered state err servername01,60020,1402591342834

2014-06-12 09:42:52,782 WARN org.apache.hadoop.hbase.master.SplitLogManager: Error splitting /hbase/splitlog/hdfs%3A%2F%2Fservername01%3A8020%2Fhbase%2F.logs%2Fservername01%2C60020%2C1393982440484-splitting%2Fservername01%252C60020%252C1393982440484.1394037615142

2014-06-12 09:42:52,783 WARN org.apache.hadoop.hbase.master.SplitLogManager: error while splitting logs in [hdfs://servername01:8020/hbase/.logs/servername01,60020,1393982440484-splitting] installed = 1 but only 0 done

2014-06-12 09:42:52,783 FATAL org.apache.hadoop.hbase.master.HMaster: Master server abort: loaded coprocessors are: []

2014-06-12 09:42:52,783 FATAL org.apache.hadoop.hbase.master.HMaster: Unhandled exception. Starting shutdown.

java.io.IOException: error or interrupted while splitting logs in [hdfs://servername01:8020/hbase/.logs/servername01,60020,1393982440484-splitting] Task = installed = 1 done = 0 error = 1

at org.apache.hadoop.hbase.master.SplitLogManager.splitLogDistributed(SplitLogManager.java:299)

at org.apache.hadoop.hbase.master.MasterFileSystem.splitLog(MasterFileSystem.java:371)

at org.apache.hadoop.hbase.master.MasterFileSystem.splitAllLogs(MasterFileSystem.java:341)

at org.apache.hadoop.hbase.master.MasterFileSystem.splitAllLogs(MasterFileSystem.java:288)

at org.apache.hadoop.hbase.master.HMaster.finishInitialization(HMaster.java:642)
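
The task names in these SplitLogManager messages are URL-encoded HDFS paths, which makes them hard to read. The sketch below decodes one back into a path you can pass to hadoop fs -ls. It assumes, as the double encoding (%252C) in the log suggests, that the WAL file name on HDFS contains a literal %2C, so it decodes exactly once. splitlog_task_to_path is a hypothetical helper name.

```python
from urllib.parse import unquote

SPLITLOG_PREFIX = "/hbase/splitlog/"


def splitlog_task_to_path(task):
    """Turn a splitlog task znode name into the HDFS path it refers to."""
    if not task.startswith(SPLITLOG_PREFIX):
        raise ValueError("not a splitlog task: " + task)
    # Decode only once: %252C becomes the literal %2C assumed to be in the file name.
    return unquote(task[len(SPLITLOG_PREFIX):])


task = ("/hbase/splitlog/hdfs%3A%2F%2Fservername01%3A8020%2Fhbase%2F.logs"
        "%2Fservername01%2C60020%2C1393982440484-splitting"
        "%2Fservername01%252C60020%252C1393982440484.1394037615142")
print(splitlog_task_to_path(task))
```

The directory part of the result is the same -splitting directory named in the ‘error while splitting logs’ line, which is the one that was moved aside.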

Additional Notes:

After a long Google search, I came across a way to fix HBase. For it to work, I had to shut down the HBase RegionServer and start the HBase Master, but the Master then complained about the RegionServer, so this did not work. To start the HBase Master, I would have had to move the splitting file away and move it back once the Master was running. To see what the command would do, I ran it on a working node (see the other hbase hbck commands for metadata fixes, etc.):

sudo -u hbase hbase hbck -fix

Number of Tables: 5

Number of live region servers: 1

Number of dead region servers: 0

Master: servername01,60000,1402597488665

Number of backup masters: 0

Number of empty REGIONINFO_QUALIFIER rows in .META.: 0

Summary:

-ROOT- is okay.

Number of regions: 1

Deployed on: servername01,60020,1402597096844

.META. is okay.

Number of regions: 1

Deployed on: servername01,60020,1402597096844

TestTable1 is okay.

Number of regions: 1

Deployed on: servername01,60020,1402597096844

0 inconsistencies detected.

Status: OK
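
When hbck has to be re-run until it reports 0 inconsistencies, it is handy to read the result from the output instead of scanning it by eye. This is a minimal sketch that assumes the summary lines look like the output above; parse_hbck_summary is a hypothetical helper name and the sample text is abbreviated from that output.

```python
import re


def parse_hbck_summary(output):
    """Return (inconsistencies, status) from hbck output; missing fields are None."""
    count = re.search(r"^\s*(\d+) inconsistencies detected", output, re.MULTILINE)
    status = re.search(r"^\s*Status:\s*(\S+)", output, re.MULTILINE)
    return (int(count.group(1)) if count else None,
            status.group(1) if status else None)


sample = """Summary:

TestTable1 is okay.

Number of regions: 1

0 inconsistencies detected.

Status: OK
"""
print(parse_hbck_summary(sample))
```

A wrapper could capture the output of sudo -u hbase hbase hbck and stop re-running the fix once the first value is 0.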

HBase: Cannot Start HBase: RegionServer Will Not Start: IndexedWALEditCodec Class Not Found

Test HBase:

hbase shell

list

list 'TABLE_NAME'

scan 'TABLE_NAME'

Digging through log files:

HBase RegionServer: /var/log/hbase/hbase-cmf-hbase1-REGIONSERVER-servername05.log.out

Resolution: I discovered that we were missing a class, which I tracked down to Phoenix. The jar file had been deleted during the upgrade from CDH 4.6 to CDH 4.7.

You will see the configuration for Phoenix in the RegionServer Advanced Configuration Snippet (Safety Valve) for hbase-site.xml; look for the WAL value:

<value>org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec</value>

This line from the log file was the clue: Class org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec not found

Restore the missing Phoenix jar, make sure the Phoenix parameters are correct, and you should be able to start the RegionServer.
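
To confirm whether any jar on a node actually provides the missing class, the lib folder can be scanned directly. This is a minimal sketch; jars_containing is a hypothetical helper name, and the folder in the example is the HBase lib folder mentioned elsewhere in these notes, so adjust it for your install.

```python
import zipfile
from pathlib import Path


def jars_containing(class_name, lib_dir):
    """Return the names of jars in lib_dir that contain the given class."""
    entry = class_name.replace(".", "/") + ".class"
    hits = []
    for jar in sorted(Path(lib_dir).glob("*.jar")):
        try:
            with zipfile.ZipFile(jar) as archive:
                if entry in archive.namelist():
                    hits.append(jar.name)
        except (zipfile.BadZipFile, OSError):
            continue  # skip truncated jars and dangling symlinks
    return hits


print(jars_containing(
    "org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec",
    "/opt/cloudera/parcels/CDH/lib/hbase/lib/"))
```

An empty list on a RegionServer host means the class is not available there, which matches the ClassNotFoundException in the log.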

ABORTING region server servername05,60020,1403044136969: Unhandled exception: Exception in createWriter

java.io.IOException: Exception in createWriter

at org.apache.hadoop.hbase.regionserver.wal.HLogFileSystem.createWriter(HLogFileSystem.java:66)

at org.apache.hadoop.hbase.regionserver.wal.HLog.createWriterInstance(HLog.java:709)

at org.apache.hadoop.hbase.regionserver.wal.HLog.rollWriter(HLog.java:629)

at org.apache.hadoop.hbase.regionserver.wal.HLog.rollWriter(HLog.java:573)

at org.apache.hadoop.hbase.regionserver.wal.HLog.<init>(HLog.java:454)

Caused by: java.io.IOException: cannot get log writer

at org.apache.hadoop.hbase.regionserver.wal.HLog.createWriter(HLog.java:802)

at org.apache.hadoop.hbase.regionserver.wal.HLogFileSystem.createWriter(HLogFileSystem.java:60)

… 10 more

Caused by: java.lang.RuntimeException: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec not found

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:1806)

at org.apache.hadoop.hbase.regionserver.wal.WALEditCodec.create(WALEditCodec.java:86)

at org.apache.hadoop.hbase.regionserver.wal.SequenceFileLogWriter.init(SequenceFileLogWriter.java:199)

at org.apache.hadoop.hbase.regionserver.wal.HLog.createWriter(HLog.java:799)

… 11 more

Caused by: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec not found

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:1774)

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:1798)

… 14 more

Caused by: java.lang.ClassNotFoundException: Class org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec not found

at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:1680)

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:1772)

… 15 more
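
With nested traces like this one, the useful line is usually the last ‘Caused by’. The sketch below pulls it out of a pasted trace; root_cause is a hypothetical helper name and the sample is abbreviated from the trace above.

```python
def root_cause(trace):
    """Return the last 'Caused by:' entry of a Java trace, else its first line."""
    lines = [ln.strip() for ln in trace.splitlines() if ln.strip()]
    causes = [ln for ln in lines if ln.startswith("Caused by:")]
    if causes:
        return causes[-1][len("Caused by:"):].strip()
    return lines[0] if lines else None


sample_trace = """java.io.IOException: Exception in createWriter
at org.apache.hadoop.hbase.regionserver.wal.HLogFileSystem.createWriter(HLogFileSystem.java:66)
Caused by: java.io.IOException: cannot get log writer
at org.apache.hadoop.hbase.regionserver.wal.HLog.createWriter(HLog.java:802)
Caused by: java.lang.ClassNotFoundException: Class org.apache.hadoop.hbase.regionserver.wal.IndexedWALEditCodec not found
at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:1680)
"""
print(root_cause(sample_trace))
```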

HBase Master: /var/log/hbase/hbase-cmf-hbase1-MASTER-servername02.log.out

Failed assignment of -ROOT-,,0.70236052 to servername05,60020,1403044475111, trying to assign elsewhere instead; retry=9

org.apache.hadoop.hbase.client.RetriesExhaustedException: Failed setting up proxy interface org.apache.hadoop.hbase.ipc.HRegionInterface to servername05/192.168.210.245:60020 after attempts=1

at org.apache.hadoop.hbase.ipc.HBaseRPC.handleConnectionException(HBaseRPC.java:263)

at org.apache.hadoop.hbase.ipc.HBaseRPC.waitForProxy(HBaseRPC.java:231)

at org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation.getHRegionConnection(HConnectionManager.java:1473)

at org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation.getHRegionConnection(HConnectionManager.java:1432)

at org.apache.hadoop.hbase.client.HConnectionManager$HConnectionImplementation.getHRegionConnection(HConnectionManager.java:1419)

Caused by: java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:567)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:207)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:528)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:492)

Attempt to fix HBase:

sudo -u hbase hbase hbck -fix

But this may get stuck because it cannot connect to a RegionServer.

HBase: Unable to start SinkRunner

This is a known issue: the HBase client API picks up the zoo.cfg file if it is on the classpath (this is not Flume specific). For now, the only workaround is to remove or rename the zoo.cfg file.
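
To find which classpath entry is supplying the stray zoo.cfg, the directories on the classpath can be checked one by one. This is a minimal sketch; find_zoo_cfg is a hypothetical helper name, it only looks at directory entries (not inside jars), and reading the CLASSPATH environment variable is just an example source for the classpath string.

```python
import os


def find_zoo_cfg(classpath):
    """Return the classpath directories that contain a zoo.cfg file."""
    hits = []
    for entry in classpath.split(os.pathsep):
        if not entry:
            continue  # ignore empty entries such as a trailing separator
        if os.path.isfile(os.path.join(entry, "zoo.cfg")):
            hits.append(entry)
    return hits


print(find_zoo_cfg(os.environ.get("CLASSPATH", "")))
```

The classpath that HBase itself uses can be printed with the hbase classpath command and passed to the function as a string.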

In relation to Phoenix, make sure the most current Phoenix driver is located in the /opt/cloudera/parcels/CDH/lib/hbase/lib/ folder; we do this through Continuous Deployment.

Reference: https://issues.cloudera.org/browse/DISTRO-438

HBase: Unable to start Master: TableExistsException: hbase:namespace

Error: org.apache.hadoop.hbase.master.TableNamespaceManager

Namespace table not found. Creating…

Jul 21, 1:23:40.026 PM FATAL org.apache.hadoop.hbase.master.HMaster

Failed to become active master

org.apache.hadoop.hbase.TableExistsException: hbase:namespace

at org.apache.hadoop.hbase.master.handler.CreateTableHandler.checkAndSetEnablingTable(CreateTableHandler.java:152)

at org.apache.hadoop.hbase.master.handler.CreateTableHandler.prepare(CreateTableHandler.java:125)

at org.apache.hadoop.hbase.master.TableNamespaceManager.createNamespaceTable(TableNamespaceManager.java:233)

at org.apache.hadoop.hbase.master.TableNamespaceManager.start(TableNamespaceManager.java:86)

at org.apache.hadoop.hbase.master.HMaster.initNamespace(HMaster.java:902)

Jul 21, 1:23:40.026 PM FATAL org.apache.hadoop.hbase.master.HMaster

Master server abort: loaded coprocessors are: []

Jul 21, 1:23:40.026 PM FATAL org.apache.hadoop.hbase.master.HMaster

Unhandled exception. Starting shutdown.

org.apache.hadoop.hbase.TableExistsException: hbase:namespace

at org.apache.hadoop.hbase.master.handler.CreateTableHandler.checkAndSetEnablingTable(CreateTableHandler.java:152)

at org.apache.hadoop.hbase.master.handler.CreateTableHandler.prepare(CreateTableHandler.java:125)

at org.apache.hadoop.hbase.master.TableNamespaceManager.createNamespaceTable(TableNamespaceManager.java:233)

at org.apache.hadoop.hbase.master.TableNamespaceManager.start(TableNamespaceManager.java:86)

at org.apache.hadoop.hbase.master.HMaster.initNamespace(HMaster.java:902)

Jul 21, 1:23:40.027 PM INFO org.apache.hadoop.hbase.regionserver.HRegionServer

STOPPED: Unhandled exception. Starting shutdown.

Resolution: This problem happened after deleting and recreating HBase. Apparently ZooKeeper remembers the old metadata from the old setup. Clear it and restart.

Clear ZK znode:

zookeeper-client

rmr /hbase

Only if needed, clear the HBase data from HDFS – ONLY DO THIS IF ABSOLUTELY NECESSARY AS ALL HBASE DATA WILL BE LOST:

sudo -u hdfs hadoop fs -rm -r /hbase/*